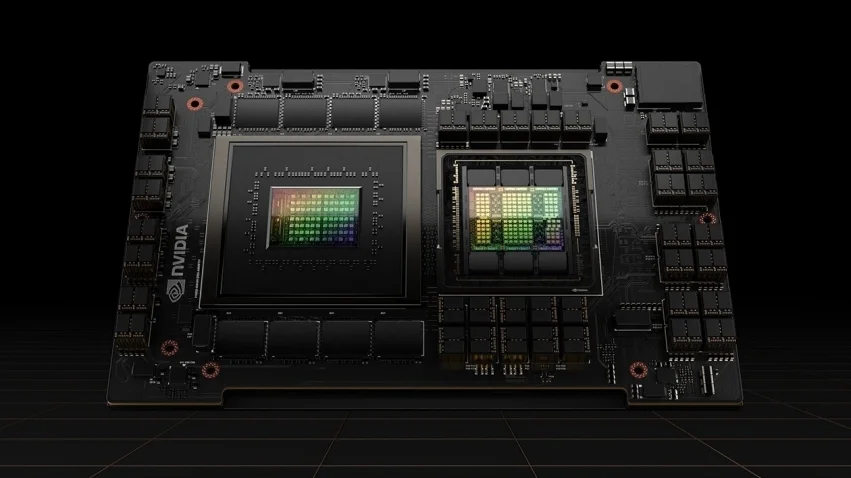

NVIDIA H100

| Specification | Value |
|---|---|
| CUDA Cores | 14,592 |
| VRAM | 138GB |
| Memory Bandwidth | 3000 GB/s |

Technical Specifications

| Specification | Value |
|---|---|
| CUDA Cores | 14,592 |
| Base Clock | 1410 MHz |
| Boost Clock | 1830 MHz |
| Memory | 138GB HBM3 |
| Memory Bus | 5120-bit |
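The bus width and bandwidth figures on this page can be related through a per-pin data rate. The following is a minimal Python sketch, assuming the 3000 GB/s figure is the aggregate memory bandwidth across the full 5120-bit bus and that 1 GB/s means 10^9 bytes per second; the helper name `per_pin_gbit_per_s` is hypothetical.

```python
# Values copied from this page; the interpretation of 3000 GB/s as
# aggregate memory bandwidth is an assumption.
MEMORY_BANDWIDTH_GB_PER_S = 3000
BUS_WIDTH_BITS = 5120


def per_pin_gbit_per_s(bandwidth_gb_per_s: float, bus_width_bits: int) -> float:
    """Convert aggregate bandwidth (GB/s) to data rate per bus pin (Gbit/s)."""
    total_gbit_per_s = bandwidth_gb_per_s * 8  # 8 bits per byte
    return total_gbit_per_s / bus_width_bits


pin_rate = per_pin_gbit_per_s(MEMORY_BANDWIDTH_GB_PER_S, BUS_WIDTH_BITS)
print(f"Per-pin data rate: {pin_rate:.4f} Gbit/s")
```

The same function can be run in reverse as a sanity check when comparing GPUs: a wider bus at the same per-pin rate yields proportionally more bandwidth.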

Performance

| Metric | Value |
|---|---|
| FP32 | 67 TFLOPS |
| FP16 | 2000 TFLOPS |
| TDP | 700W |
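The throughput and power figures listed here can be combined into rough efficiency ratios. The following is a minimal Python sketch using only the values from this page; the helper name `tflops_per_watt` is hypothetical, and the results are peak-spec ratios rather than measured efficiency.

```python
# Values copied from the performance listing on this page.
FP32_TFLOPS = 67
FP16_TFLOPS = 2000
TDP_WATTS = 700


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak throughput divided by rated board power (hypothetical helper)."""
    return tflops / watts


fp32_eff = tflops_per_watt(FP32_TFLOPS, TDP_WATTS)
fp16_eff = tflops_per_watt(FP16_TFLOPS, TDP_WATTS)
fp16_to_fp32 = FP16_TFLOPS / FP32_TFLOPS

# Report in GFLOPS/W so the FP32 figure is easier to read.
print(f"FP32: {fp32_eff * 1000:.1f} GFLOPS/W")
print(f"FP16: {fp16_eff * 1000:.1f} GFLOPS/W")
print(f"FP16/FP32 peak ratio: {fp16_to_fp32:.1f}x")
```

Real workloads rarely sustain peak throughput or draw exactly the rated TDP, so these ratios are best used for comparing spec sheets, not for predicting energy cost.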

Cloud Availability

| Metric | Value |
|---|---|
| Available Instances | 7 |
| Starting Price | $1.47/hr |

Detailed Specifications

| Specification | Value |
|---|---|
| Architecture | Hopper |
| Release Date | 2023-03-21 |
| Launch Price | $30,000.00 |
| Process | 4nm |
| Transistors | 80B |

AI Features

| Feature | Status |
|---|---|
| Tensor Cores | Gen 4 |
| Transformer Engine | Enabled |
| Flash Attention | Supported |

Physical Specifications

Dimensions

| Dimension | Value |
|---|---|
| Length | 10.5in |
| Width | 4.4in |
| Height | 2-slot |

Top GPUs for Training and Inference

| Category | Rank 1 | Rank 2 | Rank 3 |
|---|---|---|---|
| Best for Training | NVIDIA H200 | NVIDIA H100 | NVIDIA B200 |
| Best for Inference | NVIDIA A40 | NVIDIA A100 | NVIDIA A10 |

Compare GPU specifications and cloud instances to find the best GPU for your workload.
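One comparison the figures on this page allow is renting versus buying. The following is a rough Python sketch, assuming the $1.47/hr starting price and the $30,000 launch price listed on this page, and ignoring power, hosting, resale value, and price changes over time; the helper name `break_even_hours` is hypothetical.

```python
# Prices copied from this page; everything else is a simplifying assumption.
LAUNCH_PRICE_USD = 30_000.00
CLOUD_PRICE_USD_PER_HOUR = 1.47


def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rental whose total cost equals the purchase price."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


hours = break_even_hours(LAUNCH_PRICE_USD, CLOUD_PRICE_USD_PER_HOUR)
days = hours / 24  # assumes continuous, round-the-clock usage

print(f"Break-even after {hours:,.0f} rental hours (~{days:,.0f} days at 24/7 use)")
```

At lower utilization the break-even point moves further out in calendar time, which is why short or bursty workloads tend to favor cloud instances.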